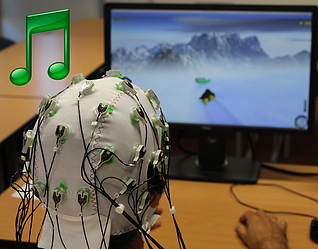

My first experiment at Inria Bordeaux.

I wish to thank all the participants and my intern Manon, to whom I also dedicate this drawing. Special thanks to Jérémy Frey, who taught me to conduct BCI experiments and use OpenViBE, and who helped with all the technical issues.

Motor Imagery is the term we use in the BCI community for simply imagining a motor action, such as moving the left or right hand, the tongue or the feet. We tend to associate imagination with visualization, but in Motor Imagery there are three ways of imagining one's limb movement. Two of them are indeed visual: (1) visualizing oneself moving, or (2) visualizing someone else moving. Both can produce the electrical signature needed to control a device, but that signature seems to be less intense. The third type, kinesthetic imagination, is the one we encourage users to achieve: feeling as if you are about to move your limb, but stopping at the last moment.

Physiology

Motor Imagery is detected as a specific electrical signature: the signal is acquired by EEG, then filtered, processed and finally classified as a command within OpenViBE.

Once we

- filter the raw EEG signal spectrally, to keep the frequencies of interest (8-24 Hz for Motor Imagery),

- and spatially, to isolate the electrodes of interest (often C3 and C4 with their neighbors, over the sensorimotor cortex),

the system can detect a decrease in the amplitude of mu waves (8-12 Hz) while the user imagines the movement (or actually moves), and an increase in beta waves (12-24 Hz) right after the tension is released, called the beta rebound.
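To make the two filtering steps concrete, here is a minimal sketch in plain Python. It is not how OpenViBE does it internally: a naive DFT stands in for a proper temporal band-pass filter, and the spatial step is reduced to simply keeping C3 and C4. The sampling rate, the `recording` dictionary and the sine-wave "EEG" are all made up for illustration.

```python
import cmath
import math

def band_power(signal, fs, f_lo, f_hi):
    """Mean power of `signal` over the DFT bins lying in [f_lo, f_hi] Hz."""
    n = len(signal)
    powers = []
    for k in range(n // 2 + 1):
        if f_lo <= k * fs / n <= f_hi:
            coeff = sum(x * cmath.exp(-2j * math.pi * k * t / n)
                        for t, x in enumerate(signal))
            powers.append(abs(coeff) ** 2 / n ** 2)
    return sum(powers) / len(powers)

def sine(freq, amplitude, fs, n):
    """Synthetic sine wave, used here in place of real EEG."""
    return [amplitude * math.sin(2 * math.pi * freq * t / fs) for t in range(n)]

FS = 128  # Hz, hypothetical sampling rate
N = 128   # one second of signal, so DFT bins are 1 Hz apart

# Synthetic recording (NOT real data): a 10 Hz rhythm that is stronger on C3,
# plus 40 Hz activity that lies outside the band of interest.
recording = {
    "C3": [a + b for a, b in zip(sine(10, 2.0, FS, N), sine(40, 1.0, FS, N))],
    "C4": [a + b for a, b in zip(sine(10, 1.0, FS, N), sine(40, 1.0, FS, N))],
    "Fz": sine(40, 1.0, FS, N),
}

# Spatial selection: keep only the electrodes over the sensorimotor cortex.
channels_of_interest = ["C3", "C4"]

# Spectral selection: keep only the 8-24 Hz band on those electrodes.
features = {ch: band_power(recording[ch], FS, 8, 24) for ch in channels_of_interest}
print(features)
```

The 40 Hz component contributes nothing to the 8-24 Hz power, and Fz is never looked at, so only the rhythm we care about survives on the electrodes we care about.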

In more scientific terms, these are called Event-Related Synchronization (ERS, a rise in voltage amplitude) and Event-Related Desynchronization (ERD, a drop in voltage amplitude).
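ERD and ERS are commonly quantified as the relative change in band power between a resting reference period and the task period. The sketch below assumes that convention; the function name and the power values are illustrative and do not come from our recordings.

```python
def erd_ers_percent(reference_power, activity_power):
    """Relative band-power change versus baseline, in percent.

    Negative values indicate ERD (power drop), positive values ERS (power rise).
    """
    if reference_power <= 0:
        raise ValueError("reference power must be positive")
    return (activity_power - reference_power) / reference_power * 100.0

# Illustrative numbers only (arbitrary units).
mu_change = erd_ers_percent(reference_power=4.0, activity_power=3.0)
beta_change = erd_ers_percent(reference_power=2.0, activity_power=3.0)

print(mu_change)    # negative: mu power dropped during imagery (ERD)
print(beta_change)  # positive: beta power rose after release (ERS)
```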

Unfortunately, there are a lot of artifacts during an experiment. For example, any muscle movement by the participant makes the EEG cap move and creates peaks in the signal that carry no information from the brain. So we often end up using various filtering methods to reduce the noise.

Machine Learning techniques (often LDA or SVM) then decode and label (left or right) the EEG features resulting from the signal processing. For this we use OpenViBE, a free, open source software which lets non-programmers apply signal processing and machine learning techniques. With the help of OpenViBE and LSL (Lab Streaming Layer, a networking protocol linking the game and OpenViBE), we maneuvered Tux (the virtual penguin) with Motor Imagery through a virtual joystick, courtesy of Jérémy Frey.
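For the curious, this is roughly what a two-class LDA does with such features. It is a minimal sketch in plain Python, not OpenViBE's implementation. The features are assumed to be band power at C3 and C4, the trial values are invented, and they follow the usual assumption that imagining one hand lowers the power over the opposite hemisphere.

```python
def mean_vector(rows):
    n = len(rows)
    return [sum(r[i] for r in rows) / n for i in range(2)]

def scatter(rows, mu):
    """2x2 scatter matrix: sum of outer products of the centered rows."""
    s = [[0.0, 0.0], [0.0, 0.0]]
    for r in rows:
        d = [r[0] - mu[0], r[1] - mu[1]]
        for i in range(2):
            for j in range(2):
                s[i][j] += d[i] * d[j]
    return s

def fit_lda(left, right):
    """Two-class LDA with a pooled covariance and equal priors."""
    mu_l, mu_r = mean_vector(left), mean_vector(right)
    s_l, s_r = scatter(left, mu_l), scatter(right, mu_r)
    dof = len(left) + len(right) - 2
    cov = [[(s_l[i][j] + s_r[i][j]) / dof for j in range(2)] for i in range(2)]
    det = cov[0][0] * cov[1][1] - cov[0][1] * cov[1][0]
    inv = [[cov[1][1] / det, -cov[0][1] / det],
           [-cov[1][0] / det, cov[0][0] / det]]
    diff = [mu_r[0] - mu_l[0], mu_r[1] - mu_l[1]]
    # Projection vector w = inverse(cov) * (mean_right - mean_left)
    w = [inv[0][0] * diff[0] + inv[0][1] * diff[1],
         inv[1][0] * diff[0] + inv[1][1] * diff[1]]
    # Threshold placed halfway between the two class means
    mid = [(mu_l[0] + mu_r[0]) / 2, (mu_l[1] + mu_r[1]) / 2]
    b = -(w[0] * mid[0] + w[1] * mid[1])
    return w, b

def predict(model, x):
    w, b = model
    return "right" if w[0] * x[0] + w[1] * x[1] + b > 0 else "left"

# Invented training trials: (band power at C3, band power at C4).
left_trials = [(1.0, 0.4), (1.1, 0.5), (0.9, 0.5), (1.0, 0.6)]   # power drops over C4
right_trials = [(0.4, 1.0), (0.5, 1.1), (0.5, 0.9), (0.6, 1.0)]  # power drops over C3

model = fit_lda(left_trials, right_trials)
print(predict(model, (0.45, 1.05)))
print(predict(model, (1.05, 0.45)))
```

The label coming out of `predict` is the kind of left/right command that can then be sent on to the game.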

Tux Racer is an open source video game which can now be controlled with a Motor Imagery BCI system, using an EEG cap.

The goal is to move the penguin in order to catch fish. We modified the game to comply with standard BCI protocols (such as the Graz protocol); access it here.

This means that

- the duration does not vary between races (Tux's speed is fixed, so a race lasts 3 min)

- the slope is always a bobsleigh track (to ensure that Tux returns to the center after each trial)

- the fish are equidistant from the center (to ensure equal effort for both classes)

Flow state

Because it is difficult to learn to give mental commands through motor imagery, users need assistance in the form of motivation, guidance and so on. The goal of this project was to help users reach a state of focus, immersion in the task, enjoyment and feeling of control, known as the Flow state. To do so we used an engaging environment, tasks of adaptive difficulty and background music that follows the task (all supported by extensive literature).

We believe that participants in such a state would perform better than usual.

Flow state is measured with the EduFlow questionnaire, and user performance by the accuracy of the classifier used in OpenViBE.
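Classifier accuracy here simply means the fraction of trials in which the decoded command matches the cued one. A minimal sketch, with a hypothetical function name and invented labels rather than our actual data:

```python
def accuracy(cued_labels, decoded_labels):
    """Fraction of trials where the decoded class equals the cued class."""
    if not cued_labels or len(cued_labels) != len(decoded_labels):
        raise ValueError("label lists must be non-empty and of equal length")
    correct = sum(c == d for c, d in zip(cued_labels, decoded_labels))
    return correct / len(cued_labels)

# Invented run of eight trials.
cued = ["left", "right", "left", "right", "left", "right", "left", "right"]
decoded = ["left", "right", "right", "right", "left", "left", "left", "right"]

run_accuracy = accuracy(cued, decoded)
print(run_accuracy)
```

With two balanced classes, chance level is 0.5, so a run is only informative when its accuracy is clearly above that.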

The preliminary results show that:

- Flow state can be manipulated by adapting the task difficulty.

- When their state of flow increased, users felt more in control.

- Flow state correlated with offline performance rates.

We present these preliminary results in a paper submitted to the Graz BCI Conference 2017, which you can find on my website, under publications.