Let’s say you want to record the position of a ball, or of any other gameObject you use in Unity.

Or, if you are using VR, you may want to stream the position and rotation of the head and controllers.

At the same time, you are measuring physiological signals, e.g., the galvanic skin response (GSR) or EEG.

During the experiment, certain triggers or events, also called Markers, occur that you want to keep track of as well.

Want to sync all of this together? My advice: use the Lab Streaming Layer (LSL).

Why LSL?

It is easy to use, and it can be used from many programming languages.

It synchronizes many data streams automatically and was designed for physiological recordings.

It handles streams from multiple machines at the same time with high timing precision, precise enough to sync even event-related potentials (ERPs).
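How can timestamps from different computers be compared? LSL stamps each sample with the clock of the machine that sends it, and the receiving side estimates the offset between the two clocks (in pylsl, the Python binding, this value is returned by `StreamInlet.time_correction()`). The sketch below illustrates the NTP-style idea behind such an estimate using only the standard library: exchange time probes, keep the one with the smallest round-trip time, and add the resulting correction to the sender's timestamps. It is a simplified illustration, not liblsl's actual code; `Probe`, `estimate_correction` and `to_local_time` are hypothetical names, and the probe values are made up.

```python
from dataclasses import dataclass
from typing import Iterable, List


@dataclass(frozen=True)
class Probe:
    """One time-probe exchange (all values in seconds)."""
    t0: float  # probe sent, receiver's clock
    t1: float  # probe received, sender's clock
    t2: float  # reply sent, sender's clock
    t3: float  # reply received, receiver's clock

    @property
    def rtt(self) -> float:
        # Network round-trip time, excluding the sender's processing time.
        return (self.t3 - self.t0) - (self.t2 - self.t1)

    @property
    def correction(self) -> float:
        # Value to ADD to a sender timestamp to express it on the
        # receiver's clock (assumes symmetric network delays).
        return ((self.t0 - self.t1) + (self.t3 - self.t2)) / 2


def estimate_correction(probes: Iterable[Probe]) -> float:
    """Use the probe with the smallest round trip, i.e. the least delay-distorted one."""
    best = min(probes, key=lambda p: p.rtt)
    return best.correction


def to_local_time(remote_stamps: Iterable[float], correction: float) -> List[float]:
    """Map sender timestamps onto the receiver's clock."""
    return [ts + correction for ts in remote_stamps]


if __name__ == "__main__":
    # Simulated sender clock running 100 s ahead of the receiver.
    probes = [
        Probe(t0=10.0, t1=110.002, t2=110.003, t3=10.005),  # symmetric 2 ms delays
        Probe(t0=20.0, t1=120.010, t2=120.011, t3=20.013),  # asymmetric 10 ms / 2 ms
    ]
    corr = estimate_correction(probes)
    print(round(corr, 6))  # -100.0
    print([round(t, 6) for t in to_local_time([150.0, 150.5], corr)])  # [50.0, 50.5]
```

The asymmetric probe alone would give a correction off by 4 ms; picking the minimum round trip is what keeps such delay noise out of the estimate.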

There is no need to configure IP addresses when communicating between machines: streams are found on the network by their name or type.

It works on a local network as well as over a WAN.

(I advise using a dedicated local router or hub to avoid interference.)

Most importantly, when using LSL, make sure the FIREWALL is DEACTIVATED for Unity, OpenViBE, and any other apps in use!

1. For offline analysis:

You can record all data into one .xdf file with LabRecorder and analyze it offline, for example in Matlab with EEGLAB or other toolboxes.

2. Online processing:

You can use OpenViBE, which already has LSL integrated, or Simulink (I am working on it; tutorial soon!).

How To:

1. Download LSL4Unity (this is a modified version of the original by xfleckx).

2. Download the latest LabRecorder.

3. Download Unity. You will need a game that is already made, e.g., Roll a Ball from the Unity tutorials, or, if you work in VR, an environment that is ready to use, for example for the HTC Vive: htc-vive-tutorial-unity.

4. This tutorial requires Windows 7 or later (I tested it on Windows 10).

Once you have downloaded and unzipped all the folders, I advise you to work through the Unity tutorials before using LSL.

Make a copy of the Unity project you intend to use and rename it, e.g., Roll_a_Ball-LSL (so that your original project stays free of LSL).

Then copy the LSL4Unity folder into the Assets folder of your copied Unity project.
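If you prepare several projects, the copy-and-rename steps above can also be scripted. A minimal sketch in Python using only the standard library; the function name `make_lsl_copy`, the `-LSL` suffix and the example paths are hypothetical, and Unity should be closed while the project is copied.

```python
import shutil
from pathlib import Path


def make_lsl_copy(project: Path, lsl4unity: Path, suffix: str = "-LSL") -> Path:
    """Copy a Unity project and put the LSL4Unity folder into its Assets folder.

    The original project is left untouched. Returns the path of the new project.
    """
    project = Path(project)
    lsl4unity = Path(lsl4unity)
    new_project = project.with_name(project.name + suffix)  # e.g. Roll_a_Ball-LSL
    if new_project.exists():
        raise FileExistsError(f"{new_project} already exists")
    # 1) Duplicate the whole project next to the original.
    shutil.copytree(project, new_project)
    # 2) Copy LSL4Unity to <new project>/Assets/LSL4Unity.
    shutil.copytree(lsl4unity, new_project / "Assets" / lsl4unity.name)
    return new_project


# Example (hypothetical paths):
# make_lsl_copy(Path(r"C:\Unity\Roll_a_Ball"), Path(r"C:\Downloads\LSL4Unity"))
```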

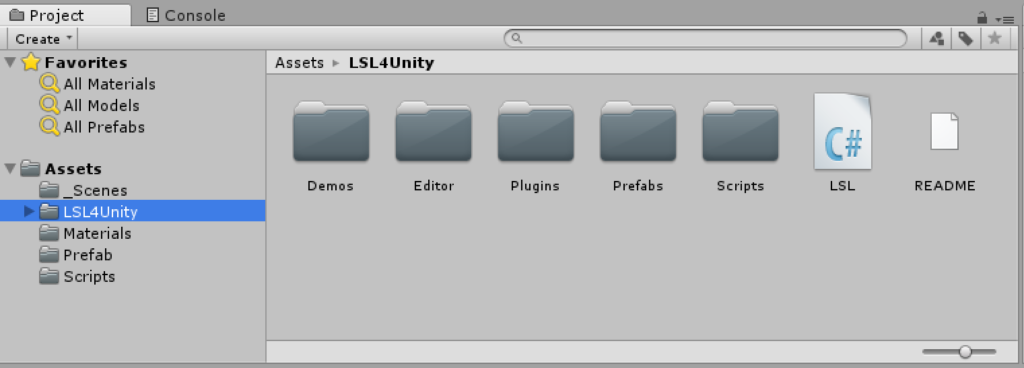

So when you open your project in Unity, it looks like this:

Send data streams from Unity:

(To receive data streams in Unity, see below.)

Send streams from Unity

such as the position and rotation of a gameObject, or of the head and controllers:

- FOLLOW THESE INSTRUCTIONS for the Roll a Ball example or for a VR (HTC Vive) example.
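Inside Unity, LSL4Unity does this with C# scripts. To show what such a stream carries, here is a hedged Python sketch using pylsl (`pip install pylsl`) that pushes a simulated ball pose: 3 position values plus a 4-value rotation quaternion per sample. The stream name `BallPose`, the type `Position`, the channel layout and the 90 Hz rate are assumptions for illustration; the LSL4Unity scripts may use other names and layouts.

```python
import math
import time
from typing import List, Sequence


def pose_sample(position: Sequence[float], rotation: Sequence[float]) -> List[float]:
    """Flatten a position (x, y, z) and a quaternion (x, y, z, w) into one 7-channel sample."""
    if len(position) != 3 or len(rotation) != 4:
        raise ValueError("expected 3 position and 4 quaternion components")
    return [float(v) for v in (*position, *rotation)]


def stream_circling_ball(duration_s: float = 10.0, rate_hz: float = 90.0) -> None:
    """Push a simulated ball pose to LSL (requires pylsl)."""
    # Imported here so that pose_sample() can be used without pylsl installed.
    from pylsl import StreamInfo, StreamOutlet

    # Hypothetical name/type/source_id: 7 float32 channels at a regular rate.
    info = StreamInfo("BallPose", "Position", 7, rate_hz, "float32", "ball-pose-sketch")
    outlet = StreamOutlet(info)
    n = int(duration_s * rate_hz)
    for i in range(n):
        angle = 2 * math.pi * i / n
        # Ball moving on a unit circle at height 0.5, with no rotation.
        sample = pose_sample((math.cos(angle), 0.5, math.sin(angle)), (0.0, 0.0, 0.0, 1.0))
        outlet.push_sample(sample)  # timestamped with the local LSL clock
        time.sleep(1.0 / rate_hz)
```

Once this (or the Unity version) runs, the stream shows up in LabRecorder after clicking Update.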

Send Marker streams from Unity

such as collisions between gameObjects.

- FOLLOW THESE INSTRUCTIONS for the Roll a Ball example or for a VR (HTC Vive) example.
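A marker stream is an irregular-rate stream (nominal rate 0) with a single string channel; each event is pushed as a one-element sample at the moment it happens, so LSL timestamps it then. A Python sketch with pylsl; the stream name, the source id and the `collision:<a>:<b>` label format are hypothetical, and the LSL4Unity marker script may name things differently.

```python
from typing import List


def collision_marker(obj_a: str, obj_b: str) -> List[str]:
    """Hypothetical label for a collision event, as a one-element LSL sample."""
    return [f"collision:{obj_a}:{obj_b}"]


def make_marker_outlet():
    """Create a single-channel, irregular-rate string stream for markers (requires pylsl)."""
    # Imported here so that collision_marker() can be used without pylsl installed.
    from pylsl import IRREGULAR_RATE, StreamInfo, StreamOutlet

    # 'Markers' is the conventional content type for event streams.
    info = StreamInfo("UnityMarkers", "Markers", 1, IRREGULAR_RATE, "string", "unity-markers-sketch")
    return StreamOutlet(info)


# Usage, e.g. from a collision callback:
# outlet = make_marker_outlet()
# outlet.push_sample(collision_marker("Player", "PickUp"))
```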

Receive data in Unity:

Receive streams in Unity

such as a physiological stream that can, for instance, influence your gameObjects’ positions,

Receive Markers/events in Unity

such as OpenViBE stimuli.
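The receiving side finds a stream by one of its properties (here its type) rather than by IP address, opens an inlet and pulls samples. A Python sketch with pylsl; the stream type `GSR`, the 0 to 20 input range and the height mapping are hypothetical, chosen only to show how a physiological value could drive a gameObject's position (in Unity this would be a C# script based on LSL4Unity). Marker streams are pulled the same way; only the channel format (often strings) differs.

```python
def scale_to_height(value: float, lo: float, hi: float,
                    min_y: float = 0.5, max_y: float = 3.0) -> float:
    """Map a value in [lo, hi] linearly to a height in [min_y, max_y], clamping outliers."""
    if hi <= lo:
        raise ValueError("hi must be greater than lo")
    frac = min(max((value - lo) / (hi - lo), 0.0), 1.0)
    return min_y + frac * (max_y - min_y)


def follow_stream(stream_type: str = "GSR", timeout: float = 5.0) -> None:
    """Print a target height for each incoming sample (requires pylsl)."""
    # Imported here so that scale_to_height() can be used without pylsl installed.
    from pylsl import StreamInlet, resolve_byprop

    # Discovery by stream property: no IP address is needed.
    streams = resolve_byprop("type", stream_type, timeout=timeout)
    if not streams:
        raise RuntimeError(f"no LSL stream of type {stream_type!r} found")
    inlet = StreamInlet(streams[0])
    # Offset from the sender's clock to ours; the first call may block briefly.
    # In long sessions, query it again from time to time.
    correction = inlet.time_correction()
    while True:
        sample, ts = inlet.pull_sample(timeout=timeout)
        if sample is None:  # no data within `timeout` seconds: stop
            break
        height = scale_to_height(sample[0], lo=0.0, hi=20.0)
        print(f"t={ts + correction:.3f}s  y={height:.2f}")
```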

==============================================================

Offline recording and syncing data with LabRecorder


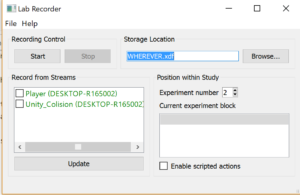

- Once you have followed the instructions above, start the game in Unity (press Play).

- Open LabRecorder.

(Set the destination of the .xdf file and click Update to see the active streams.)

- Check all the streams you want to record (Marker streams together with continuous streams) and press Start to record! Once you are done, press Stop. Everything is recorded in one .xdf file, together with the timing information needed to sync the streams.

To analyze data from this .xdf file, you need a dedicated XDF reader, such as the xdf toolbox (xdf-master) for Matlab or pyxdf for Python.

I used EEGLAB and the xdf toolbox to extract and analyze data in Matlab, but I am switching to Python 🙂
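For those going the Python route: once an .xdf file is loaded (for example with pyxdf, `pip install pyxdf`), a common first step is to find, for each marker, the closest sample of a continuous stream. A minimal sketch; the stream names in the usage comment, the file name `session.xdf` and the helper names are hypothetical, and nearest-sample lookup is just one simple way to align events (cutting epochs around each marker is the usual next step).

```python
import bisect
from typing import Dict, List, Sequence


def nearest_sample_indices(sample_times: Sequence[float],
                           event_times: Sequence[float]) -> List[int]:
    """For each event timestamp, return the index of the closest sample.

    sample_times must be sorted in ascending order (as LSL timestamps are).
    """
    if len(sample_times) == 0:
        raise ValueError("sample_times is empty")
    indices = []
    for t in event_times:
        i = bisect.bisect_left(sample_times, t)
        if i == 0:
            indices.append(0)
        elif i == len(sample_times):
            indices.append(len(sample_times) - 1)
        else:
            # Pick whichever neighbour is closer in time (earlier one on ties).
            before, after = sample_times[i - 1], sample_times[i]
            indices.append(i if after - t < t - before else i - 1)
    return indices


def load_streams(path: str) -> Dict[str, dict]:
    """Load an .xdf file with pyxdf and index its streams by name (requires pyxdf)."""
    import pyxdf

    # Recorded clock offsets are applied by default (synchronize_clocks=True).
    streams, _header = pyxdf.load_xdf(path)
    return {s["info"]["name"][0]: s for s in streams}


# Usage (hypothetical file and stream names):
# streams = load_streams("session.xdf")
# pose, markers = streams["BallPose"], streams["UnityMarkers"]
# idx = nearest_sample_indices(list(pose["time_stamps"]), list(markers["time_stamps"]))
# for i, label in zip(idx, markers["time_series"]):
#     print(label[0], pose["time_series"][i])
```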